In today’s data-driven world, having clean, complete datasets is essential for practical analysis and decision-making. However, real-world data often contains missing values due to various reasons such as sensor failures, human error, or privacy restrictions. Handling these missing values is a crucial step before any meaningful analysis can be performed. This is where data imputation techniques come into play — methods to fill in missing data points intelligently to preserve the dataset’s integrity.

For those pursuing a data analyst course in Pune, understanding different imputation techniques and their strengths and weaknesses is vital. In this blog, we will explore and compare three popular data imputation techniques: MICE (Multiple Imputation by Chained Equations), KNN (K-Nearest Neighbours), and Autoencoders. Each method brings unique advantages and challenges depending on the type of data and the missingness pattern.

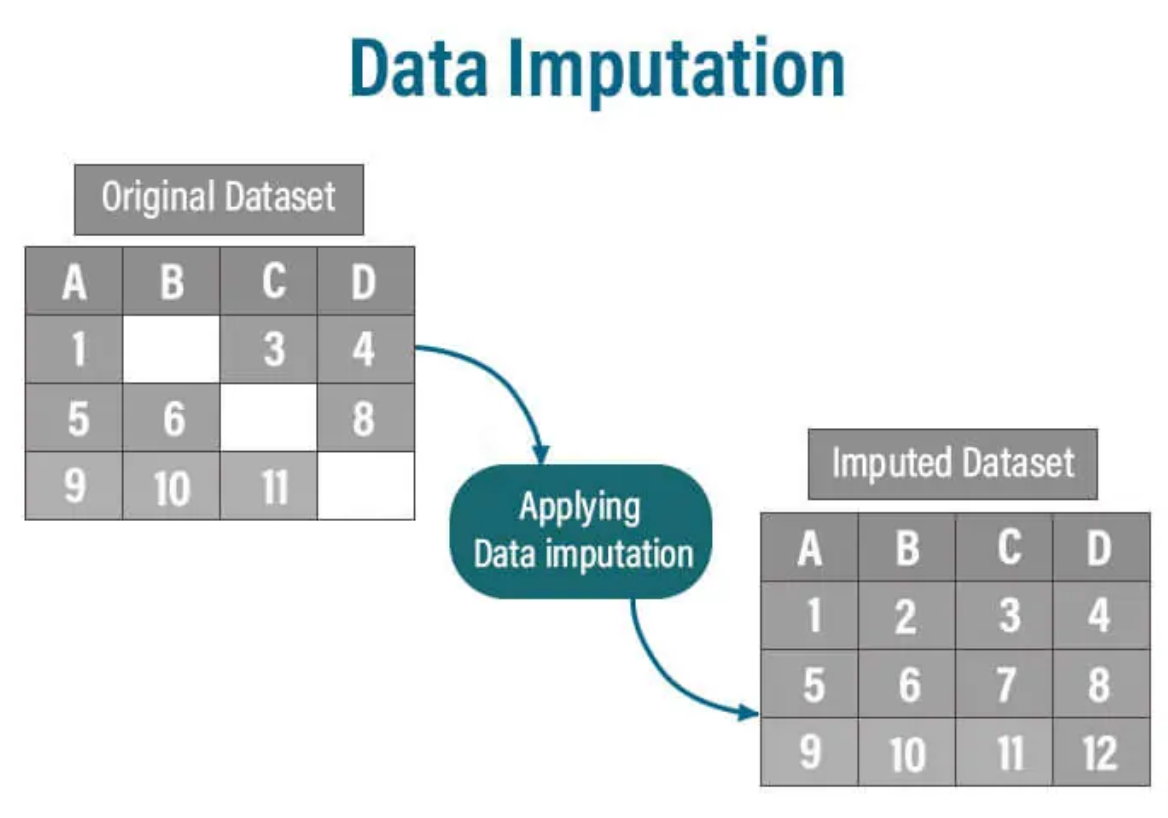

What is Data Imputation?

Data imputation refers to the process of replacing missing or null values in datasets with substituted values, allowing analysts and machine learning models to utilise the data fully. Without proper imputation, missing values can bias the results or reduce the dataset’s usability.

Missing data can be classified as:

- MCAR (Missing Completely At Random): Missingness is unrelated to the data.

- MAR (Missing At Random): Missingness depends on observed data.

- MNAR (Missing Not At Random): Missingness depends on unobserved data.

Choosing an appropriate imputation method depends on the data type, missingness mechanism, and analysis goals.

1. MICE (Multiple Imputation by Chained Equations)

MICE is one of the most popular statistical imputation methods, especially effective when data is missing at random (MAR). It models each variable with missing data as a function of other variables and performs multiple rounds of imputations iteratively.

How MICE Works

- Initially, missing values are filled with simple initial guesses (e.g., mean or median).

- For each variable with missing data, a regression model is built using other variables as predictors.

- Missing values are imputed using the regression model.

- This process is repeated for multiple iterations (chained equations) to stabilise imputations.

- Finally, multiple complete datasets are generated, and analysis is performed on each, with results combined for accuracy.

Advantages of MICE

- Handles different variable types (continuous, categorical).

- Captures complex relationships by modelling variables conditionally.

- Generates multiple imputations accounting for uncertainty, improving statistical inference.

Disadvantages of MICE

- Computationally intensive for large datasets.

- Requires careful model specification for each variable.

- Can be sensitive to model misspecification or outliers.

2. KNN (K-Nearest Neighbours) Imputation

KNN imputation is a simple, non-parametric method that imputes missing values by referencing similar observations in the dataset. The idea is that data points close to each other in feature space are likely to have similar values.

How KNN Works

- For each data point with missing values, find the k nearest neighbours based on non-missing features.

- Impute missing values by averaging (for continuous variables) or using the most frequent value (for categorical variables) among the neighbours.

- Distance metrics like Euclidean or Manhattan distance are typically used.

Advantages of KNN

- Easy to understand and implement.

- Works well when similar observations exist.

- No assumptions about the data distribution.

Disadvantages of KNN

- Computationally expensive with large datasets due to distance calculations.

- Sensitive to the choice of k and the distance metric.

- Performance deteriorates when missing data is extensive or when neighbours are not truly similar.

3. Autoencoders for Data Imputation

Autoencoders are neural network models designed for unsupervised learning of efficient data codings. Recently, they have gained popularity for imputation tasks due to their ability to learn complex, nonlinear data representations.

How Autoencoders Work for Imputation

- An autoencoder consists of an encoder that compresses input data into a latent representation and a decoder that reconstructs the original data from this representation.

- During training, the model learns to minimise reconstruction error, effectively capturing the data distribution.

- To impute missing data, the autoencoder is trained on incomplete data with masking strategies or complete data.

- The trained model predicts missing values by reconstructing inputs, filling in the blanks with learned patterns.

Advantages of Autoencoders

- Capable of capturing nonlinear relationships and complex feature interactions.

- Scales well with large datasets.

- Flexible architecture allows integration with other deep learning techniques.

Disadvantages of Autoencoders

- Requires substantial training data and computational resources.

- Model tuning (architecture, learning rate) can be challenging.

- Interpretability is lower compared to traditional methods like MICE.

Comparing MICE, KNN, and Autoencoders

| Aspect | MICE | KNN | Autoencoders |

| Type | Statistical, regression-based | Instance-based, similarity-driven | Deep learning, representation-based |

| Assumptions | MAR assumption, linear or logistic models | No distribution assumption | Requires training data to learn distribution |

| Handling data types | Mixed (categorical, continuous) | Mixed, but better for continuous | Mainly continuous, can be adapted |

| Computational cost | Moderate to high | High for large data | High, requires GPUs for large datasets |

| Robustness to outliers | Moderate | Sensitive | More robust due to learned features |

| Scalability | Moderate | Poor for large datasets | Good scalability |

| Ease of implementation | Moderate to advanced | Simple | Complex, requires ML expertise |

| Imputation quality | Good for MAR, uncertainty quantification | Good for local similarity | Potentially superior with complex data |

If you want to deepen your understanding of data handling and imputation, consider enrolling in a comprehensive data analyst course that covers these advanced preprocessing techniques, preparing you for real-world data challenges.

When to Use Which?

- MICE is recommended when your dataset has mixed types of variables, and missing data is assumed to be MAR. It’s highly effective for traditional statistical analysis and provides a principled way to incorporate uncertainty in imputations. This technique fits well within the learning objectives of a data analyst course in Pune, emphasising understanding regression models and statistical principles.

- KNN is suitable when data points naturally cluster or have close neighbours with similar characteristics. It’s a straightforward technique for smaller datasets or when you want a quick baseline imputation method without heavy modelling.

- Autoencoders excel with large, complex datasets, primarily when nonlinear relationships between features exist. If you have access to sufficient computational resources and data, autoencoders can provide state-of-the-art imputation results. This approach is increasingly relevant in advanced data analyst roles and machine learning-focused data analyst courses.

Practical Tips for Implementing These Techniques

- Always perform exploratory data analysis (EDA) to understand the nature and pattern of missing data before selecting an imputation technique.

- Validate imputation results using cross-validation or holdout datasets to avoid overfitting.

- Combine imputation with feature engineering and scaling to improve downstream model performance.

- When possible, use domain knowledge to guide imputation choices and evaluate if the imputed values make practical sense.

Conclusion

Data imputation is a fundamental step in preparing datasets for analysis, and selecting the proper technique can dramatically impact your results. MICE, KNN, and Autoencoders represent three distinct approaches — from statistical modelling to similarity-based to deep learning methods — each with its strengths and use cases.

For aspiring data analysts and professionals, gaining hands-on experience with these imputation methods is invaluable. Whether you are enrolled in a data analyst course or pursuing advanced analytics training, mastering these techniques will empower you to handle missing data effectively and deliver high-quality insights.

By understanding when and how to apply MICE, KNN, or Autoencoders, you can ensure your datasets are complete, reliable, and ready for analysis, leading to better-informed decisions and impactful outcomes in your projects.

Business Name: ExcelR – Data Science, Data Analytics Course Training in Pune

Address: 101 A ,1st Floor, Siddh Icon, Baner Rd, opposite Lane To Royal Enfield Showroom, beside Asian Box Restaurant, Baner, Pune, Maharashtra 411045

Phone Number: 098809 13504

Email Id: enquiry@excelr.com